In any organisation where multiple systems exist it is inevitable that initially many discrepancies...

Multiple sources of truth

Digitalisation strategies have created an often-raised fallacy: the single source of truth. While conceptually appealing, it is challenging at best and often impractical in reality.

Any moderately complex asset in a business inevitably needs multiple tools to operate successfully: finance, procurement, engineering design, engineering analysis, maintenance management, control systems, logging, safety and regulatory tools, and inspection solutions.

These tools tend to be built around operational areas, such as a CMMS for maintenance, a system for procurement, a solution for finance, and so on.

All of these solutions contain a mix of domain-specific content and duplicated content. In many cases the overlap between systems is very significant; in others it is smaller.

A complete picture of an asset can only be achieved by looking across all systems. Of course, where multiple systems hold duplicate data, it is likely or even certain that discrepancies will arise.

Sadly no single solution exists that can meet all requirements.

The reality is that there will always be multiple tools in use, and therefore multiple sources of truth. Which one an individual uses will depend on their role and objectives.

Multiple approaches have been tried in the past. Two of the biggest have been:

- Master data management: Relies on building a single central repository of the key entities needed in the business, eliminating redundancy and duplication, then integrating all of the applications back to that 'single source of truth.' This creates a central hub with connections to the individual systems.

- Direct integration: Integration tools can be applied on their own to link all the different solutions together and try to align data between systems. This creates a complex network that is often expensive to build and maintain, and fragile in operation.
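
To illustrate why the two topologies scale so differently, here is a minimal sketch that counts the connections each one needs. It assumes the worst case for direct integration, where every system is linked to every other system; real deployments usually link only a subset. The function names are hypothetical and used only for illustration.

```python
def hub_connections(n_systems: int) -> int:
    """Connections needed when every system links only to a central hub."""
    return n_systems


def point_to_point_connections(n_systems: int) -> int:
    """Connections needed when every pair of systems is linked directly.

    Assumption: a fully connected network, which is the worst case.
    """
    return n_systems * (n_systems - 1) // 2


for n in (3, 5, 10):
    print(n, hub_connections(n), point_to_point_connections(n))
```

Under this assumption, ten systems need 10 connections to a hub but 45 direct links, which gives a feel for why a directly integrated network becomes costly to maintain as systems are added.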

A critical success factor for both approaches is a single semantic model of all data. Achieving this becomes continuously harder with acquisitions or divestments of assets, which inevitably bring data in different formats and developed to different standards.

The challenge is made harder again by projects that change the underlying data. These changes are created in different tools, often with multiple projects running concurrently, and are complex to apply consistently at the close of each project.

Both approaches require significant investment, a large IT project and a great deal of time. And while the integration work proceeds, the asset is still being used and undergoing continuous change.

Aligning data, not integrating systems

If multiple systems are a reality, then is there a better way to align the data in those systems and continuously improve data confidence?

The challenge becomes how to easily identify differences between the multiple sources of truth, resolve those differences and then keep them aligned affordably, while accommodating the inevitability of change within existing assets and the evolution of the portfolio of assets.

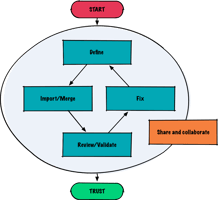

LivingTWIN takes a new approach, providing a digital workface that lets an individual user aggregate information from multiple data sources, highlight discrepancies, raise issues and track actions to resolve those issues back in the original source systems. On completion of those tasks, the aggregate can be queried to demonstrate closure of the issues and show ongoing improvement in the data.

Because the issue is resolved in the source system, not just in a separate 'twin', the actual data used for decision making across your organisation is corrected. This quickly improves both the quality of the data and the trust all stakeholders place in it, delivering business improvements.
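
As a rough illustration of the aggregate-and-compare idea described above, the following minimal sketch gathers attribute values for the same asset from several systems and flags those that disagree. It is not LivingTWIN's implementation: the system names, asset tag, attributes, values and the `find_discrepancies` helper are all hypothetical, and the comparison is a simple exact match.

```python
from collections import defaultdict

# Hypothetical extracts: system name -> {asset tag -> {attribute -> value}}
sources = {
    "cmms": {"P-101": {"manufacturer": "Acme", "rated_power_kw": 75}},
    "engineering": {"P-101": {"manufacturer": "Acme", "rated_power_kw": 90}},
    "procurement": {"P-101": {"manufacturer": "ACME Ltd"}},
}


def find_discrepancies(sources):
    """Return {(asset, attribute): {system: value}} where systems disagree."""
    seen = defaultdict(dict)
    # Aggregate every value reported for each (asset, attribute) pair.
    for system, assets in sources.items():
        for asset, attributes in assets.items():
            for attribute, value in attributes.items():
                seen[(asset, attribute)][system] = value
    # Keep only the pairs where more than one distinct value was reported.
    return {
        key: values
        for key, values in seen.items()
        if len(set(values.values())) > 1
    }


issues = find_discrepancies(sources)
for (asset, attribute), values in sorted(issues.items()):
    print(asset, attribute, values)
```

Each flagged pair could be raised as an issue against the relevant source system, and re-running the comparison after the fix would show it no longer appears. Note that exact matching also flags "Acme" against "ACME Ltd", so a real comparison would likely need some normalisation of names and units.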